Exhibition 1_Group 27

| Team number | Group 27 |
|---|---|
| Students | María Peiró Torralba, Marinka Roest, Meike Huisman, Romée Postma |
| Coach | Dieter Vandoren |
| Brief | DCODE |
| Keywords | Ethics, Exploration, Resilience, Speculative |
| Exhibition link | |
| Status | Finished |
| One liner! | How resilient are you?! |
| Link to video | |

Thoughts to begin with

Throughout the course of the past three weeks, our design goal has emerged: helping people become more resilient in a future in which AI will play a big role. However, our first explorations were a lot broader, and came from us asking ourselves many questions and having explorative conversations about AI.

Can AIs be taught to forget, and therefore forgive, the same way humans do? How do we perceive or imagine AIs to be? In what ways are we different, and in what ways similar?

How will we behave when AI becomes a part of our daily life? And can we be resilient then?

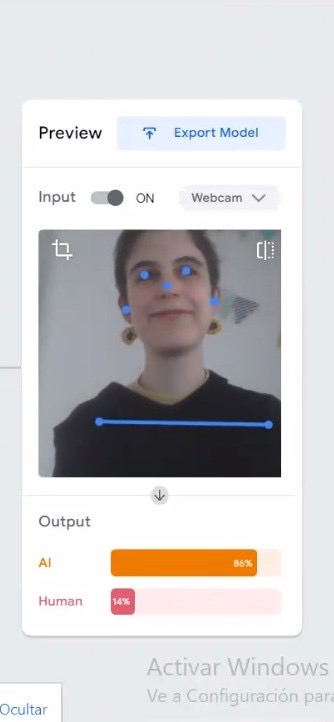

Am I (not) a robot? // Prototype 1

How do humans imagine AIs?

Prototype + test // To find out how humans imagine AIs, we asked people around us (n = 15) to answer some basic questions (1) as an AI system and (2) as themselves. We recorded the interviews and used the Teachable Machine tool to train a model on the poses people make when acting like an AI versus being themselves. In the end, the model could tell a "human acting like an AI" pose apart from a "human being themselves" pose!

Quotes // "AIs don't have emotions" / "AIs are smarter than me" / "AIs are not uncertain, they are confident" / "AIs are right about everything"

What we take with us // Humans have a superficial understanding of AIs: they assume AIs are always right.

Yearly Check-(A)In // Prototype 2

What happens when AIs have more power than humans?

Prototype + test // To find out what happens when AIs have more power than humans, we imagined a futuristic scenario in which AIs have the power to judge us. In a city hall setting, you visit an AI for your yearly check-in, where the AI rates your behavior within society as a percentage. Our goal was to see how people would react to such a big and invasive judgment by an AI, and how far we could go before people no longer accepted the result.

Quotes // "The test doesn't make sense" / "What is the criteria for my judgement?" / "I don't understand the reasoning, there must be something I don't understand" / "I am so confused"

What we take with us // No one said: "Maybe the AI is wrong." Unconsciously, people choose to trust the output of AIs: they imagine a story between the input and the output to make it logical for themselves.

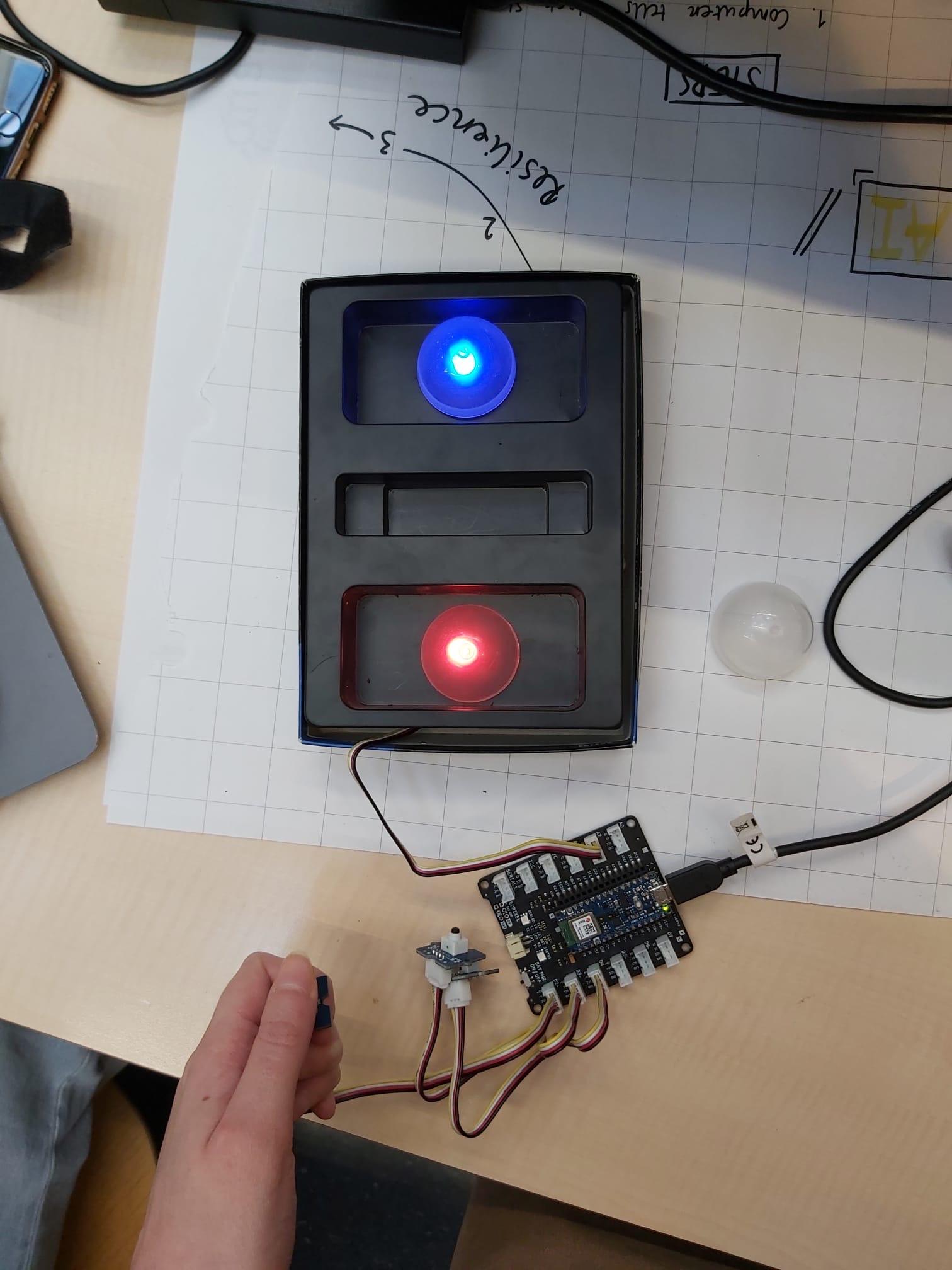

Beat the AI // Prototype 3

What do humans think AIs are doing in the Black Box?

Prototype + test // To find out what humans think AIs are doing in the Black Box [the space between data input and output], we set up a guessing game in which people played against an AI. We wanted to find out how people imagined the AI playing the game and to see their reaction when they found out what actually happened. The AI solved the game in a very different way than our test participants, which surprised them. Afterwards, they weren't sure whether the AI was playing smart or playing unfair...

Quotes // "The AI is probably asking questions in a smarter way and much faster" / "It is not fair, because they didn't really think?" / "The AI isn't smarter than me" / "That way I could do it as well, but I hadn't had the time"

What we take with us // Humans can choose whether to accept the output of an AI, provided they are aware of the reasoning behind it.

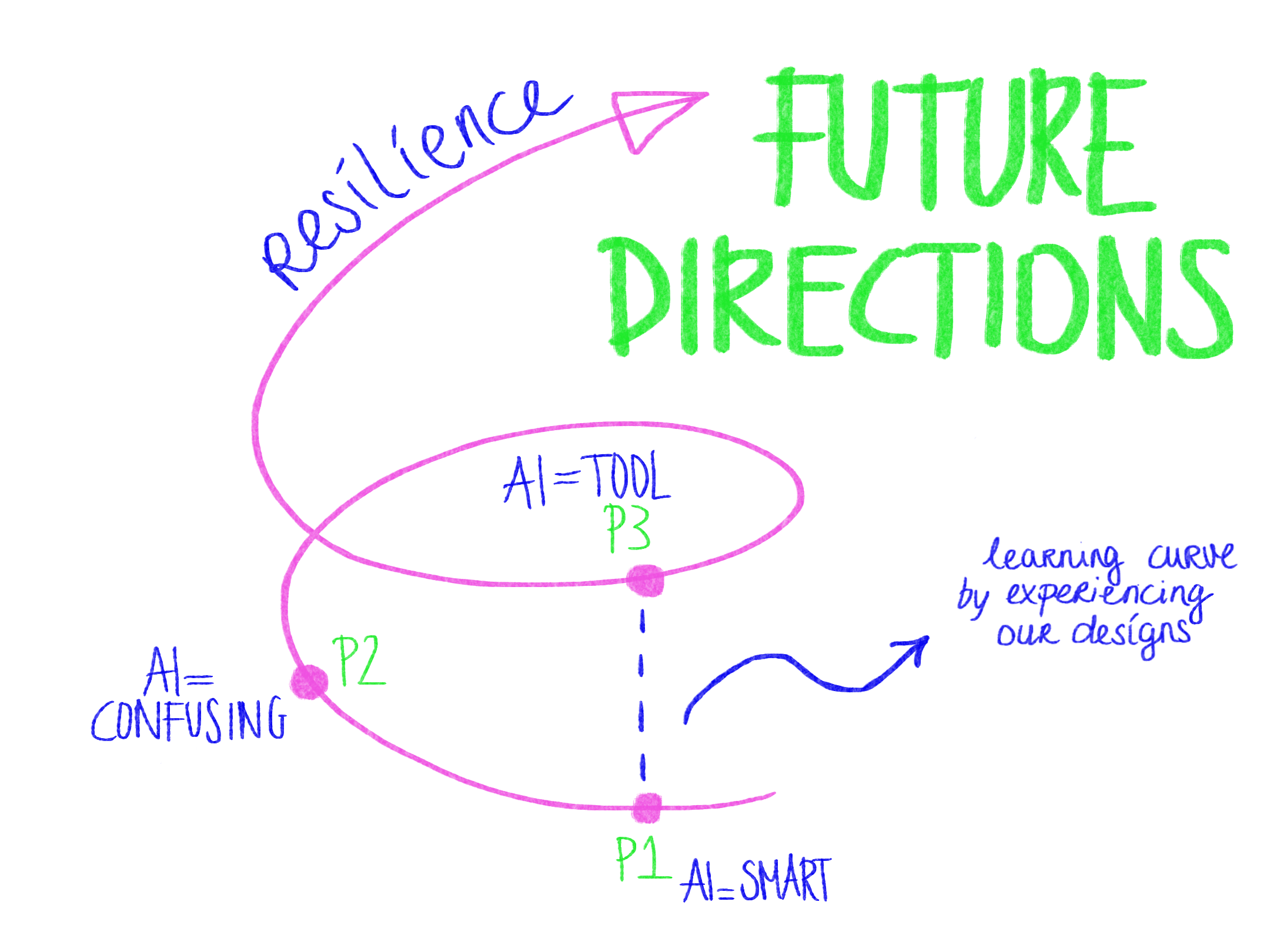

Future directions

Resilience will be an important theme in our further exploration and design. We think it's important to make people aware of AI and to build their understanding of it, so that they are prepared for a world in which AI has a larger part to play.

We want to create an experience in which we raise curiosity and enthusiasm about the topic of AI by giving people a peek at the world of AI in a way they haven't seen before. We want to give them a push to find more information themselves and give them inspiration to become even more resilient. To achieve this, we want to keep our conversation about "machine unlearning" in mind, as well as the findings of our prototypes in the last few weeks.

We discovered that it's important to gain insight into people's reactions and emotions before and after the experience, to see its impact. We want to continue this way of working for the remainder of this project.